videos on mlx

Asked on 2025-07-10


Apple's WWDC sessions provide a comprehensive overview of MLX, a framework designed for machine learning on Apple silicon. Here are some key sessions and their highlights related to MLX:

- Get started with MLX for Apple silicon:

  - This session introduces MLX as an open-source array framework optimized for Apple silicon. It covers the basics of using MLX in Python, including installation and basic array operations. It also highlights MLX's key features, such as unified memory, lazy evaluation, and function transformations, which make it efficient for machine learning on Apple devices.

-

Explore large language models on Apple silicon with MLX:

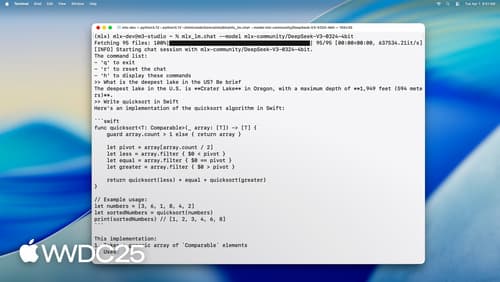

- This session focuses on using MLX for large language models on Apple Silicon. It demonstrates how MLX can perform inference and fine-tune massive models directly on a Mac. The session also introduces MLX LM, a Python library for handling large language model requirements, and discusses text generation, model quantization, and fine-tuning.

-

Train your machine learning and AI models on Apple GPUs:

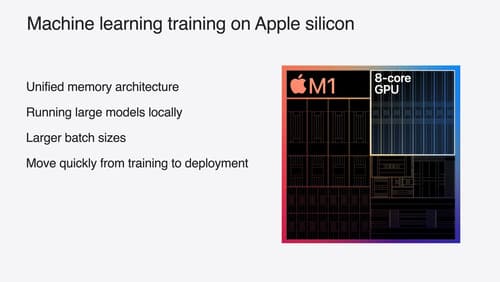

- This session discusses the integration of MLX with Apple's Metal backend, highlighting features like just-in-time compilation and a NumPy-like interface. It provides insights into how MLX is optimized for Apple GPUs, supporting distributed training and unified memory.

-

Discover machine learning & AI frameworks on Apple platforms:

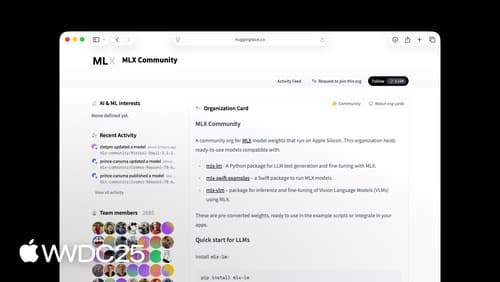

- This session provides an overview of various machine learning frameworks available on Apple platforms, including MLX. It emphasizes MLX's ability to run state-of-the-art ML inference on large language models and its integration with platforms like Hugging Face for accessing a wide range of models.

These sessions collectively showcase MLX's capabilities and its integration with Apple's hardware and software ecosystem, making it a powerful tool for machine learning and AI development on Apple devices.

Get started with MLX for Apple silicon

MLX is a flexible and efficient array framework for numerical computing and machine learning on Apple silicon. We’ll explore fundamental features including unified memory, lazy computation, and function transformations. We’ll also look at more advanced techniques for building and accelerating machine learning models across Apple’s platforms using Swift and Python APIs.

Train your machine learning and AI models on Apple GPUs

Learn how to train your models on Apple Silicon with Metal for PyTorch, JAX and TensorFlow. Take advantage of new attention operations and quantization support for improved transformer model performance on your devices.
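For the PyTorch path named in this description, Metal acceleration is reached through PyTorch's `mps` device. The sketch below is a hypothetical toy regression, not code from the session; it assumes PyTorch is installed and falls back to the CPU on machines where MPS is unavailable.

```python
import torch

# Use the Metal (MPS) backend when available, otherwise fall back to the CPU.
device = torch.device("mps" if torch.backends.mps.is_available() else "cpu")

torch.manual_seed(0)
# Hypothetical toy data: y is a noiseless linear function of x
x = torch.randn(256, 3, device=device)
true_w = torch.tensor([[1.5], [-2.0], [0.5]], device=device)
y = x @ true_w

model = torch.nn.Linear(3, 1).to(device)
opt = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = torch.nn.MSELoss()

initial_loss = loss_fn(model(x), y).item()
for _ in range(200):
    opt.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()  # gradients are computed on the selected device
    opt.step()
final_loss = loss.item()
```

The same loop runs unchanged on either device; only the `device` selection differs.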

Explore large language models on Apple silicon with MLX

Discover MLX LM – designed specifically to make working with large language models simple and efficient on Apple silicon. We’ll cover how to fine-tune and run inference on state-of-the-art large language models on your Mac, and how to seamlessly integrate them into Swift-based applications and projects.