What types of experiences are possible with the foundation model? I want to be able to classify images in a photos-style app.

Asked on 2025-06-12

To classify images in a photos-style app, use the Vision framework rather than the Foundation Models framework. Vision is part of Apple's suite of machine learning and AI frameworks and provides over 30 APIs for different types of image analysis, which help you understand the content of images and videos. Image classification is one of those capabilities, so it applies directly to your use case.

The Foundation Models framework itself is optimized for tasks such as text extraction, summarization, and generating structured data, so it is a better fit for language features than for image classification. You can integrate Vision's capabilities into your app with just a few lines of code, and all processing is done on-device, which maintains user privacy and allows offline functionality.
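As a rough illustration of what "a few lines of code" could look like, here is a minimal sketch that classifies a `CGImage` with Vision's `VNClassifyImageRequest`. The `ImageLabel` type, the `topLabels` and `classify` helpers, and the 0.3 confidence threshold are hypothetical choices made for this example; they are not taken from the session and would need tuning for a real app.

```swift
import Foundation
#if canImport(Vision)
import Vision
import CoreGraphics
#endif

/// Hypothetical value type: a label plus the classifier's confidence (0.0 to 1.0).
struct ImageLabel: Equatable {
    let identifier: String
    let confidence: Float
}

/// Hypothetical helper: keeps labels at or above `minimumConfidence`,
/// sorts them by descending confidence, and returns at most `limit` of them.
func topLabels(_ labels: [ImageLabel],
               minimumConfidence: Float = 0.3,
               limit: Int = 5) -> [ImageLabel] {
    let kept = labels
        .filter { $0.confidence >= minimumConfidence }
        .sorted { $0.confidence > $1.confidence }
    return Array(kept.prefix(limit))
}

#if canImport(Vision)
/// Runs Vision's built-in image classifier on-device and returns the
/// strongest labels. The threshold and limit are assumptions, not
/// recommended values.
func classify(_ image: CGImage) throws -> [ImageLabel] {
    let request = VNClassifyImageRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])  // synchronous; call off the main thread

    // Each observation carries a label identifier and a confidence score.
    let observations = request.results ?? []
    let labels = observations.map {
        ImageLabel(identifier: $0.identifier, confidence: $0.confidence)
    }
    return topLabels(labels)
}
#endif
```

In a photos-style app you might call `try classify(cgImage)` on a background queue for each asset and store the returned identifiers as searchable tags. The filtering step is kept separate from the Vision call so that the threshold can be adjusted without touching the request code.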

For more detail on the Vision framework and its capabilities, see the session "Discover machine learning & AI frameworks on Apple platforms."

Platforms State of the Union

Discover the newest advancements on Apple platforms.

Meet the Foundation Models framework

Learn how to tap into the on-device large language model behind Apple Intelligence! This high-level overview covers everything from guided generation for generating Swift data structures and streaming for responsive experiences, to tool calling for integrating data sources and sessions for context management. This session has no prerequisites.

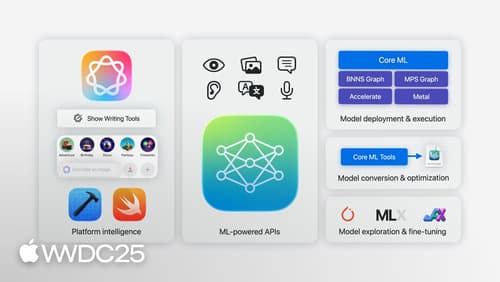

Discover machine learning & AI frameworks on Apple platforms

Tour the latest updates to machine learning and AI frameworks available on Apple platforms. Whether you are an app developer ready to tap into Apple Intelligence, an ML engineer optimizing models for on-device deployment, or an AI enthusiast exploring the frontier of what is possible, we’ll offer guidance to help select the right tools for your needs.